Accessing the Kubernetes API from a pod using a kubectl proxy sidecar

Yes, I know: this information is already available in the Kubernetes documentation. So when somebody reached out to me asking how to access the API server (or the Kubernetes API in general), I directed them to that documentation page. Bear in mind that this person was new to the k8s world. On that page they found only information on how to use serviceAccount credentials to authenticate a container (pod) to the API server, plus a few other options. That raised a lot of questions.

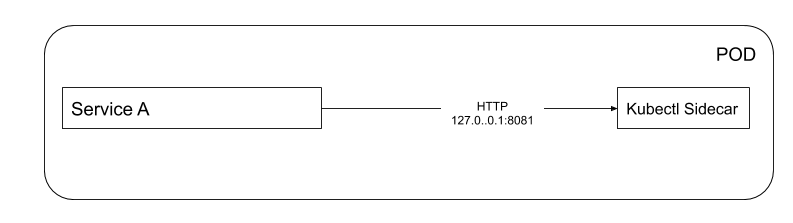

While developing the application on their machine, before containerizing it and deploying it to Kubernetes, they used `kubectl proxy` and accessed the whole API at localhost:8001. So they asked if there was a way to replicate that inside the cluster, and I said sure: you can run a sidecar in the same pod that runs the `kubectl proxy` command. I could sense the confusion on their face, as if to say, "I have seen that in the documentation, but I am not sure what a sidecar is or how to create one." That is the motivation for this post.

This post assumes you already have a cluster up and running.

POD

A pod is a collection of containers that share a network namespace (and can share storage volumes), and it is the basic unit of deployment in Kubernetes. All containers in a pod are scheduled on the same node. You can read more about this here.

Since a pod can hold more than one container, one container (service A) can serve whatever purpose we have, while another runs a background process or a service that service A uses. That second container is what we call the sidecar. These co-located containers share the pod's network and any volumes they mount, and they are managed together as a single entity. Because service A and the sidecar share the same network namespace, they can communicate with each other over localhost.

Example of the Service A and Sidecar in a pod

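To make the "talk over localhost" point concrete, here is a minimal Python sketch under one loud assumption: two threads in one process stand in for the two containers, because, like containers in a pod, they share a single network namespace. `SidecarHandler`, `start_sidecar`, and `call_sidecar` are hypothetical names for illustration, and the stand-in sidecar is a tiny HTTP server, not kubectl proxy.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Talk to localhost directly, ignoring any HTTP(S)_PROXY environment variables.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class SidecarHandler(BaseHTTPRequestHandler):
    """Stand-in for the sidecar container: answers every GET with a fixed body."""

    def do_GET(self):
        body = b"hello from the sidecar"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def start_sidecar(port=0):
    """Serve on 127.0.0.1 in a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), SidecarHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_sidecar(port):
    """Stand-in for service A: reaches the sidecar through localhost only."""
    with OPENER.open(f"http://localhost:{port}/", timeout=5) as resp:
        return resp.read().decode()


if __name__ == "__main__":
    sidecar = start_sidecar()
    print(call_sidecar(sidecar.server_address[1]))  # prints: hello from the sidecar
    sidecar.shutdown()
```

In a real pod the same thing happens across container boundaries: service A never needs the sidecar's pod IP, only `localhost` and the port.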

Show me the code, fam?

I thought you'd never ask… (insert laughing emoji). First, you need to create a Docker image for service A (of course, you already did). Then let's create the Docker image for the kubectl sidecar:

```dockerfile
FROM alpine:3.10.1

# v1.15.2 should match (or be within one minor version of) the version your cluster is running
ADD https://storage.googleapis.com/kubernetes-release/release/v1.15.2/bin/linux/amd64/kubectl /usr/local/bin/kubectl

RUN chmod +x /usr/local/bin/kubectl

# kubectl proxy listens on port 8001 by default
EXPOSE 8001

ENTRYPOINT ["/usr/local/bin/kubectl", "proxy"]
```

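I used Alpine Linux (pinned to 3.10.1) just to keep the image small, since we are not doing anything fancy with it. The Dockerfile downloads the kubectl binary for Kubernetes v1.15.2 (the latest release at the time of writing) and runs `kubectl proxy`, exposing port 8001 because that is the default port of the `kubectl proxy` command. Inside the pod, kubectl picks up the pod's service account credentials automatically, and because the proxy listens on 127.0.0.1 by default, it is reachable only from containers in the same pod. If you are lazy like me, you can use the image I already built: barniemakonda/sidecar:v0.0.1. I got you; lazy people should stick together, wink wink.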
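From service A's point of view, the sidecar is just a plain-HTTP server on localhost:8001 that forwards requests to the API server and handles authentication for it. The Python sketch below assumes the proxy is on its default port and uses only the standard library; `get_json` and `list_pod_names` are hypothetical helper names, while `/api/v1/namespaces/{namespace}/pods` is the standard core API path for listing pods.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the kubectl proxy sidecar listens on its default port, 8001.
PROXY_URL = "http://localhost:8001"

# Talk to the sidecar directly, ignoring any HTTP(S)_PROXY environment variables.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def get_json(path, base_url=PROXY_URL, timeout=5):
    """GET a Kubernetes API path through the proxy and decode the JSON body.

    No bearer token or CA certificate is handled here: kubectl proxy
    authenticates to the API server on service A's behalf.
    """
    with OPENER.open(base_url + path, timeout=timeout) as resp:
        return json.load(resp)


def list_pod_names(namespace="default", base_url=PROXY_URL):
    """Return the names of the pods in `namespace` from the core v1 PodList."""
    path = f"/api/v1/namespaces/{urllib.parse.quote(namespace)}/pods"
    pod_list = get_json(path, base_url)
    return [item["metadata"]["name"] for item in pod_list.get("items", [])]
```

Calling `list_pod_names()` from service A would return the names of the pods in the default namespace, provided the pod's service account is allowed to list pods (the RBAC section of this post covers that).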

Since you already have an image of your service that consumes the Kubernetes API on port 8001, let's create the pod YAML definition. In this example, I have used mhausenblas/simpleservice as service A; it does nothing with the Kubernetes API and is there just for demonstration purposes.

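```yaml
apiVersion: v1
kind: Pod
metadata:
  name: k8-demo
spec:
  containers:
  # service A: the application container
  - name: sise
    image: mhausenblas/simpleservice:0.5.0
    ports:
    - containerPort: 9876
  # the kubectl proxy sidecar (kubectl proxy's default port is 8001)
  - name: sidecar
    image: barniemakonda/sidecar:v0.0.1
    ports:
    - containerPort: 8001
```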
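One thing this manifest does not control is timing: Kubernetes does not wait for kubectl proxy to be listening before it starts service A, so service A's first request can fail. Here is a hedged Python sketch of a retry loop service A could run at startup. `wait_for_proxy` and its parameters are hypothetical, and polling `/` is an assumption; any HTTP response, even a 403, shows the proxy is up.

```python
import time
import urllib.error
import urllib.request

# Talk to the sidecar directly, ignoring any HTTP(S)_PROXY environment variables.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def wait_for_proxy(base_url="http://localhost:8001", attempts=10, delay=1.0):
    """Poll the proxy until it answers, so service A does not race the sidecar.

    Returns True as soon as any HTTP response comes back, or False once
    `attempts` connection attempts have failed.
    """
    for attempt in range(attempts):
        try:
            with OPENER.open(base_url + "/", timeout=delay):
                return True
        except urllib.error.HTTPError:
            # An error status (e.g. 403) still means the proxy is listening;
            # authorization is a separate problem.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: the proxy is not up yet.
            if attempt < attempts - 1:
                time.sleep(delay)
    return False
```

Catching `HTTPError` before `URLError` matters here, because `HTTPError` is a subclass of `URLError`.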

Then run `kubectl apply -f pod.yaml`. Once the pod is up and running, we can test whether the Kubernetes API is reachable from inside our pod (k8-demo):

```shell
kubectl exec -it k8-demo -c sise -- curl localhost:8001
```

This lists all the API paths that the Kubernetes API server exposes. If you run into an error like

`forbidden: User "system:serviceaccount:default:default" cannot get path "/"`

then, for now, bind the cluster-admin role to the default service account in the default namespace (this grants full control of the cluster, so only do it on a test cluster):

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: default-rbac
subjects:
- kind: ServiceAccount
  # the service account the pod runs as
  name: default
  # the namespace that service account lives in
  namespace: default
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
```

Then apply it:

```shell
kubectl apply -f rbac.yaml
```
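Once the binding is applied, service A can make the same request the curl command made. This Python sketch assumes the proxy is on localhost:8001, and `list_api_paths` is a hypothetical helper. It relies on the API server's root path returning a JSON object with a `paths` list, and it turns the 403 response from the RBAC error above into an explicit `PermissionError`.

```python
import json
import urllib.error
import urllib.request

# Talk to the sidecar directly, ignoring any HTTP(S)_PROXY environment variables.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def list_api_paths(base_url="http://localhost:8001", timeout=5):
    """Python counterpart of `curl localhost:8001`: return the API server's path list.

    Raises PermissionError when the pod's service account may not read "/",
    which is the same RBAC failure the curl command reports.
    """
    try:
        with OPENER.open(base_url + "/", timeout=timeout) as resp:
            return json.load(resp)["paths"]
    except urllib.error.HTTPError as err:
        if err.code == 403:
            raise PermissionError(
                "403 Forbidden: the pod's service account lacks RBAC "
                "permission for this path; check the ClusterRoleBinding"
            ) from err
        raise
```

Raising a dedicated exception keeps the "is the proxy up?" question separate from the "is the service account allowed?" question, which are the two failure modes this setup usually hits.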

That's it, folks… You will probably want to read more about RBAC authorization, though.

Warm regards (anyone else watching Patriot Act with Hasan Minhaj?).